F5 Distributed Cloud Site

Definition of a Site

A Site is a physical or cloud location where F5 Nodes are deployed. A Site can be a public cloud location such as an AWS VPC, Azure VNET, or GCP VPC; a physical data center; or an edge location such as a manufacturing site, factory, retail store, restaurant, charging station, or robot. Even though F5® Distributed Cloud’s Regional Edges are available as Sites for F5® Distributed Cloud Mesh (Mesh) or F5® Distributed Cloud App Stack (App Stack) services, they are not marked in F5® Distributed Cloud Console (Console) or the APIs as “customer Sites”. Our SaaS can manage many Sites for the customer and provides a common set of APIs to consume infrastructure, allowing developers and DevOps teams to focus on their tooling and applications.

Cluster of Nodes

A Site is made up of a cluster of one or more Nodes, and the cluster can be scaled up or down based on load by adding or removing Nodes. Each Node is a Linux-based software appliance (delivered as an ISO image or as a deployment spec on k8s) that is deployed in a virtual machine, in a k8s cluster, on commodity hardware, or on our edge hardware. Kubernetes is used as the clustering technology, and all our software services run as k8s workloads on these clusters. In addition, customer workloads also run on this k8s if App Stack services are enabled on these Nodes. This managed k8s on the Node is referred to as “physical k8s” from now on.

A physical location may be deployed with multiple clusters of Nodes; each cluster is considered its own Site to ease manageability. As a result, a Site is a combination of location and cluster: if a physical location has two clusters, then there are two Sites in that location. Henceforward, a Site may be referred to as a Site or a Location in the rest of the documentation. Where a Location is expected to have multiple Sites, the documentation will say so explicitly.

Network Topology of a Site

From a network point of view, a customer Site can be deployed in two modes. A Site can also support more than two interfaces; that case is covered in later sections.

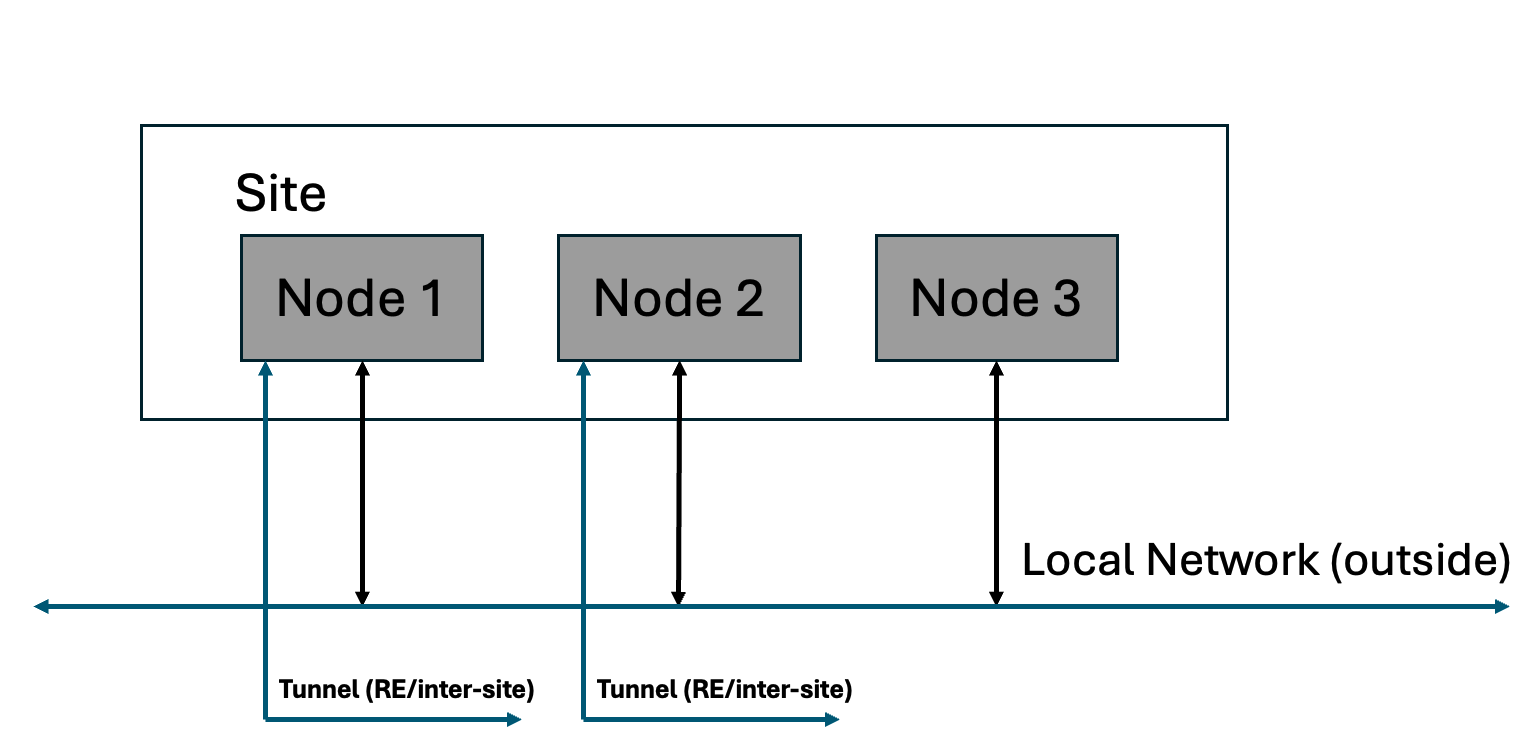

- On a stick (single interface): Ingress and egress traffic for the Node is handled on a single network interface, as shown in the diagram. Inter-Site traffic goes over IPsec tunnels (through the Regional Edges (REs) or, optionally, directly Site-to-Site). In this mode, the network functionality delivered is load balancing, gateway for Kubernetes, API proxy, generic proxy, and application security. Local network here refers to the local data center network, a VPC subnet in AWS, a VNET in Azure, or a back-to-back network connection on a cluster of F5 Distributed Cloud hardware.

Figure: Site with Single Interface

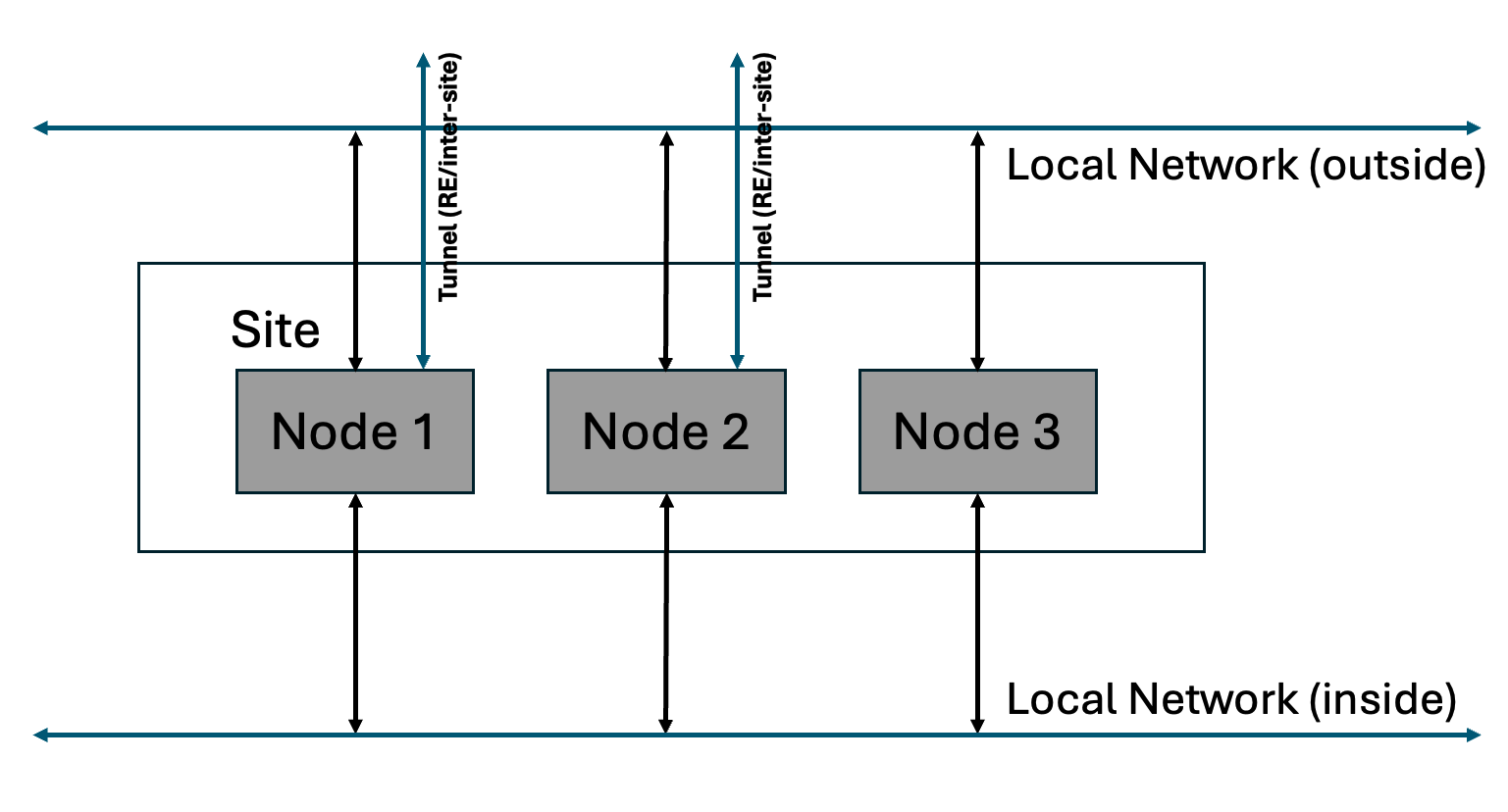

- Default Gateway (two interfaces): There are two interfaces, and inter-Site traffic goes over IPsec tunnels that are established over the local network (outside). In this mode, because the Site acts as the default gateway, network functionality such as routing and firewalling between the inside and outside networks can be enabled in addition to the other application connectivity and security functionality. Local network here refers to the local data center network, a VPC subnet in AWS, a VNET in Azure, or a back-to-back network connection on a cluster of F5 Distributed Cloud hardware.

Figure: Site with Two Interfaces

Logical view of the Site

A Node consists of many software components that provide computing, storage, network, and security services. A cluster of multiple Nodes, with its own physical k8s, acts as a super-converged infrastructure that can run applications without the additional external components or software typically required by other systems.

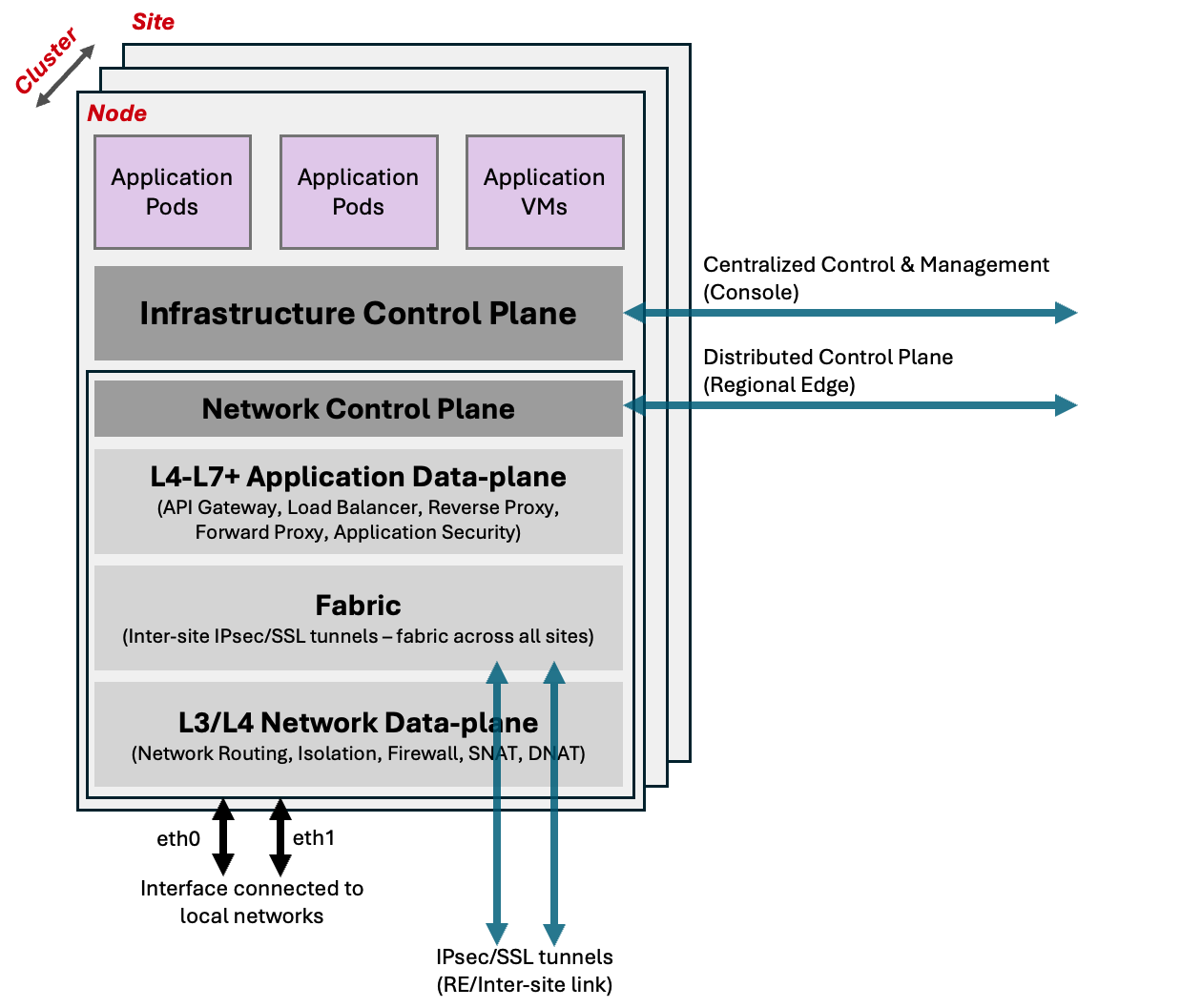

Figure: Logical View of Site

As each Node comes up, it calls home to F5 Distributed Cloud's centralized control and management plane. Once it is authenticated and approved by the user, it brings up the infrastructure control plane and forms a cluster of Nodes (including the physical k8s). Once the control plane service is up and the cluster is formed, it starts deploying F5 Distributed Cloud microservices.

The infrastructure control plane within the Site, primarily composed of F5 Distributed Cloud's managed physical k8s, is responsible for the functioning and health of the Nodes, the F5 Distributed Cloud microservices, and the customer workloads running within the cluster. Once registration is complete and the cluster is admitted into the F5 Distributed Cloud Service, the distributed control plane running in the Regional Edge Sites becomes responsible for launching and managing workloads in the Site. This distributed control plane is also responsible for aggregating status, logs, and metrics from individual Sites and propagating them to the Console.

One of the microservices in the Site is the networking service, which is responsible for all the Mesh services. It gets its bootstrap configuration from a distributed control plane running in the regional edges of the F5 Distributed Cloud global infrastructure. This service creates the IPsec/SSL tunnels to regional edges (and/or other Sites) that are configured during bootstrap of the Node.

Using the secure tunnels, this service becomes part of the F5 Distributed Cloud Fabric, an isolated IP network that lets it connect to the local control plane in the regional edges or to other Sites in the location. The Fabric is also used as the underlay for data plane traffic. None of the physical interfaces can talk directly to this isolated fabric. Traffic on the fabric goes through a network firewall at every Site, and only certain applications are allowed to communicate. The fabric also has protections such as reverse path forwarding checks to prevent spoofing, and tenant-level checks so that only Sites of the same tenant can talk to each other.

Mesh network connectivity and security services include the following functions:

- IP switching/routing

- Isolated Networks for physical interfaces

- Isolated Networks for physical k8s namespace

- Connect networks directly or through source NAT (SNAT)

- Local internet breakout when used in default gateway mode

- Firewall policy - network (TCP, IP) security policy

- BGP routing to advertise VIPs within Site

Mesh application connectivity and security services include the following functions:

- Application and API security policy

- Forward Proxy

- Load Balancer

- Application policy (based on HTTP, APIs, etc.)

- API proxy

- Web application firewall

Additionally, Regional Edge Sites can provide the following functionality for customer Sites and applications:

- Expose applications on the public Internet and use our global network for services such as anycast, global load balancing, application security, volumetric DDoS protection, etc.

- Offload network and application connectivity and security services to the regional edges

- Tunnel the customer Site to regional edge and use our global network as customer backbone network with complete DMZ functionality

Configuring a Site

Certified Hardware

One of our objectives is to help customers easily deploy and manage infrastructure in the cloud or at the edge, and make it part of their own “logical cloud” spanning multiple cloud providers and/or edge locations.

The initial deployment and admission control are done using zero touch provisioning (ZTP) to make the bring-up process easy and secure. Because Nodes can be deployed in multiple configurations across multiple platforms, ZTP must support multiple flavors of bootstrap configuration. At initial boot, the device needs enough configuration to call home to the centralized control plane. As a result, we bundle different bootstrap configurations in the Node software image.

Certified hardware object represents physical hardware or a cloud instance that will be used to instantiate the Node in a given configuration for the Site. It has the following information for bootstrap:

- Type

- List of vendor models

- List of supported devices (e.g., network interfaces)

- Image name

Certified hardware objects are only available in the shared namespace. They are created and managed by F5 Distributed Cloud Services, and users are not allowed to configure them. They let users know which hardware and cloud images are supported, how they can be used and configured at bootstrap, and which image needs to be downloaded to make the configuration work.

Details on all the parameters that can be configured for this object are covered in the API specification.

Site Registration

To register a Site, the tenant needs to allocate a token as part of the ZTP process. This token can be embedded in the ISO image downloaded for a physical Node, inserted into the VM through cloud-init, or provided in the deployment spec when launching on k8s. For an ISO, the user can request that the token be embedded in the image when downloading it from the Console.

When a Node’s software boots up, it is expected to call home to register. For the call home to succeed, at least one interface must be up with connectivity to the centralized control and management service; this interface is defined in the certified hardware and needs to be configured appropriately at bootstrap. During call home, the Node sends a registration request containing the token, which identifies the tenant, along with additional information about the Node, such as its certified hardware and hardware details.

The new Site then shows up as a registration request in the Console. The user can approve or deny the request and optionally assign parameters such as the Site name, geographic location, and labels.

New Nodes that register with the same Site name automatically become part of that Site's cluster after approval. A Site supports a 2n+1 configuration for the cluster's control Nodes, with a minimum of one control Node. Beyond three Nodes, any number of additional Nodes can be added, and they become worker Nodes. In a cloud environment, worker Nodes can be scaled automatically without additional registration, either based on load or by manually changing the Node count in the Site configuration.
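The Node-count rule above can be sketched in Python. This is a minimal illustration, not the product's logic: the function names `split_nodes` and `tolerated_failures` are hypothetical, and the sketch assumes a one-Node Site has one control Node while a Site of three or more Nodes has exactly three, rejecting the two-Node case that the text does not describe. The quorum arithmetic is the general property of a 2n+1 control plane: a majority of n+1 members survives n failures.

```python
def split_nodes(total_nodes: int) -> tuple[int, int]:
    """Return (control_nodes, worker_nodes) for a Site of total_nodes Nodes.

    Assumption for this sketch: a one-Node Site has a single control Node,
    and a Site of three or more Nodes has three control Nodes (2n+1, n=1),
    with every further Node becoming a worker. The two-Node case is not
    described in the text, so it is rejected here.
    """
    if total_nodes == 1:
        return 1, 0
    if total_nodes >= 3:
        return 3, total_nodes - 3
    raise ValueError(f"unsupported Node count in this sketch: {total_nodes}")


def tolerated_failures(control_nodes: int) -> int:
    """A 2n+1 control plane keeps a majority quorum (n+1) with n Nodes down."""
    if control_nodes < 1 or control_nodes % 2 == 0:
        raise ValueError("control Node count must be odd (2n+1)")
    return (control_nodes - 1) // 2


for total in (1, 3, 5):
    control, workers = split_nodes(total)
    print(total, control, workers, tolerated_failures(control))
```

Running it prints `1 1 0 0`, `3 3 0 1`, and `5 3 2 1`: adding Nodes beyond three adds workers but does not change how many control Node failures the Site tolerates.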

The whole registration process can be automated using bulk registration and the TPM on a physical hardware Node to store the cryptographic certificates and keys that identify the Node, tenant, etc. This requires custom integration with the customer backend and a new certified hardware instance, and can be requested through the support channel.

On-a-stick Deployment (Single Interface)

If the Site is being deployed in the single-interface (on-a-stick) mode, as determined by the selected image and its configuration, the following happens during the deployment process:

- The system automatically assigns the single “physical” interface the type SITE_LOCAL_OUTSIDE and enables DHCP on it. Using DHCP, the Node gets its IP address, subnet, default gateway, and DNS server configuration. Static IP configuration will be supported in the future, as part of our roadmap.

- This interface is also configured to be part of a virtual network of type SITE_LOCAL_NETWORK. This will be relevant later when configuring additional features for the Site, for example the network firewall.

- The IP address of this interface is called HOST_IP in SITE_LOCAL_NETWORK; it is the address assigned to the interface. This will be relevant later when the system automatically selects a VIP for this Site.

Default Gateway Deployment (Two or more Interfaces)

If the Site is being deployed in the default gateway (two-interface) mode, as determined by the selected image and its configuration, the following happens during the deployment process:

- The system automatically assigns the first interface the type SITE_LOCAL_OUTSIDE and enables DHCP on it. Using DHCP, the Node gets its IP address, subnet, default gateway, and DNS server configuration. Static IP configuration will be supported in the future, as part of our roadmap.

- This interface is also configured to be part of a virtual network of type SITE_LOCAL_NETWORK. This will be relevant later when configuring additional features for the Site, for example the network firewall.

- The second interface is assigned the type SITE_LOCAL_INSIDE and usually has no configuration. The user can enable DHCP or assign a static IP address to this interface.

- This second interface is also configured to be part of a virtual network of type SITE_LOCAL_NETWORK_INSIDE. If a DHCP server is configured for this network, the Site becomes the default gateway for the SITE_LOCAL_NETWORK_INSIDE network and assigns IP addresses to any client on the network.

- The IP address of each interface is called HOST_IP in its respective network: SITE_LOCAL_NETWORK and SITE_LOCAL_NETWORK_INSIDE.
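The interface assignments in both modes can be summarized as a small data model. This is a sketch under stated assumptions: the `Interface` class, the `assign_bootstrap_roles` function, and the interface names `eth0` and `eth1` are hypothetical, and only the first two interfaces are handled because Sites with more interfaces are covered elsewhere.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Interface:
    name: str                       # hypothetical OS interface name
    role: str                       # SITE_LOCAL_OUTSIDE or SITE_LOCAL_INSIDE
    network: str                    # SITE_LOCAL_NETWORK or SITE_LOCAL_NETWORK_INSIDE
    dhcp_client: bool               # True when the Node learns its IP via DHCP
    host_ip: Optional[str] = None   # HOST_IP in `network`, once assigned


def assign_bootstrap_roles(nics: list[str]) -> list[Interface]:
    """Assign roles as described for on-a-stick and default gateway mode.

    Only the first two interfaces are handled; Sites with more interfaces
    are outside the scope of this sketch.
    """
    if not nics:
        raise ValueError("at least one interface is needed to call home")
    # First interface (both modes): outside, DHCP enabled.
    roles = [Interface(nics[0], "SITE_LOCAL_OUTSIDE", "SITE_LOCAL_NETWORK", True)]
    if len(nics) >= 2:
        # Second interface (default gateway mode): inside, unconfigured by
        # default; the user may later enable DHCP or assign a static IP.
        roles.append(Interface(nics[1], "SITE_LOCAL_INSIDE",
                               "SITE_LOCAL_NETWORK_INSIDE", False))
    return roles


for iface in assign_bootstrap_roles(["eth0", "eth1"]):
    print(iface.name, iface.role, iface.network, iface.dhcp_client)
```

Passing a single interface name yields the on-a-stick layout; passing two yields the default gateway layout.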

VIP for a Cluster of Multiple Nodes

As discussed earlier, the VIP is chosen automatically from the interface's HOST_IP. With multiple Nodes, however, there are multiple interface IPs, which is often undesirable. To solve this, a common VIP can be configured for the inside and outside networks in the Site object. Whenever VIPs need to be selected automatically, this VIP is preferred over HOST_IP.

Details on all the parameters that can be configured for this object are covered in the API specification.
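The VIP preference can be expressed as a small function. It is a hypothetical sketch (the name `vip_candidates` and the addresses are illustrative): it is evaluated once per network (inside or outside), and it only shows which addresses are candidates, not how the system picks among several HOST_IP values.

```python
from typing import Optional


def vip_candidates(host_ips: list[str], common_vip: Optional[str] = None) -> list[str]:
    """Return the addresses that could serve as the VIP on one network."""
    if common_vip is not None:
        # A common VIP configured in the Site object is preferred over HOST_IP.
        return [common_vip]
    # Without it, every Node contributes its own HOST_IP: one candidate per Node.
    return list(host_ips)


node_ips = ["10.0.0.11", "10.0.0.12", "10.0.0.13"]
print(vip_candidates(node_ips))                # three candidates, one per Node
print(vip_candidates(node_ips, "10.0.0.100"))  # the single common VIP
```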

Site Connectivity to Regional Edge

When a Site is approved, the system automatically selects two regional edge Sites based on the public IP address from which the centralized control plane received the Site registration. In some scenarios the Geo-IP database may not be accurate; users can then override the selected regional edges by providing the “ves.io/ves-io-region” label to state an explicit RE preference. The system connects to two regional edges from this label and, if they are not available, connects to other nearby Sites in the region.
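The selection order described above can be sketched as follows. The function name, its parameters, and the RE names (`re-1`, `re-2`, ...) are hypothetical; in particular, the format of the `ves.io/ves-io-region` label value is not described here, so the sketch simply assumes it has already been turned into an ordered list of REs. Falling back to nearby REs when the Geo-IP choice is short is also an assumption of the sketch.

```python
from typing import Optional


def select_regional_edges(
    geoip_order: list[str],
    available: set[str],
    label_order: Optional[list[str]] = None,
    nearby: Optional[list[str]] = None,
    count: int = 2,
) -> list[str]:
    """Pick `count` regional edges for a newly approved Site.

    geoip_order: REs ranked by Geo-IP of the registration's public IP.
    label_order: REs derived from the ves.io/ves-io-region label, if set;
                 an explicit label overrides the Geo-IP ranking.
    nearby:      other REs in the region, used only as a fallback.
    """
    preferred = label_order if label_order else geoip_order
    chosen = [edge for edge in preferred if edge in available][:count]
    # Fill any remaining slots from nearby REs that are up and not yet chosen.
    for edge in nearby or []:
        if len(chosen) >= count:
            break
        if edge in available and edge not in chosen:
            chosen.append(edge)
    return chosen


print(select_regional_edges(["re-1", "re-2"], {"re-2", "re-4", "re-5"},
                            label_order=["re-4", "re-9"], nearby=["re-5", "re-2"]))
```

In the example, the label asks for `re-4` and `re-9`; `re-9` is unavailable, so the second slot is filled with the nearby `re-5`, giving `['re-4', 're-5']`.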

Once these REs are selected, the two selected regional edges are passed down to the Site as part of the registration approval, along with the following additional information:

- Site identity and PKI certificates - these certificates are regularly rotated by the system

- Initial configuration to create and negotiate secure tunnels for the F5 Distributed Cloud fabric.

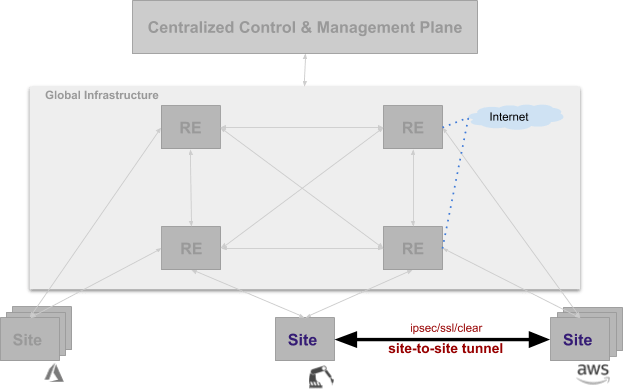

The Site tries to negotiate both IPsec and SSL tunnels to the selected regional edge Sites. If IPsec can establish connectivity, it is preferred; otherwise the Site falls back to the SSL tunnel. Once connectivity is established, the Fabric comes up and the distributed control plane in the regional edge Site takes over control of the Site. These tunnels carry management, control, and data traffic, including Site-to-Site and Site-to-public traffic.
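The IPsec-first preference can be sketched per regional edge. This hypothetical helper (`choose_transports`) takes the outcome of negotiating both tunnel types, which is assumed to be known already, and returns the transport the Site would use toward each RE.

```python
def choose_transports(results: dict[str, dict[str, bool]]) -> dict[str, str]:
    """Pick the tunnel to use toward each regional edge.

    results maps an RE name to the outcome of negotiating each tunnel type,
    e.g. {"ipsec": False, "ssl": True}. IPsec is preferred when it is up;
    SSL is the fallback; "down" means neither tunnel came up.
    """
    chosen: dict[str, str] = {}
    for edge, up in results.items():
        if up.get("ipsec"):
            chosen[edge] = "ipsec"
        elif up.get("ssl"):
            chosen[edge] = "ssl"
        else:
            chosen[edge] = "down"
    return chosen


print(choose_transports({"re-1": {"ipsec": True, "ssl": True},
                         "re-2": {"ipsec": False, "ssl": True}}))
```

Here `re-1` uses IPsec even though SSL also came up, while `re-2` falls back to SSL.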

Site to Site Connectivity

In many cases, tenants may need to connect Sites within the same location over their existing networks, or Sites across locations over their own backbone network, instead of using our global network. Tenants that prefer to use their own backbone can send data traffic between Sites over their network instead of ours; this is achieved by creating Site-to-Site tunnels using a Site Mesh Group.

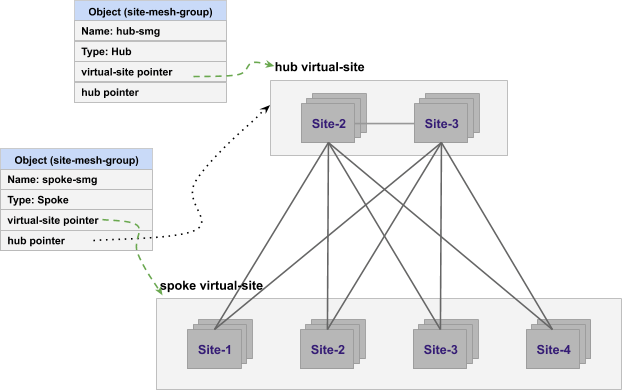

Figure: Connectivity Using Site Mesh Group

A Site Mesh Group is a configuration object that defines a group of arbitrary Sites and sets up tunnels between those Sites using IPsec or SSL (clear, unencrypted tunnels are also possible). If the Site Mesh Group is of type HUB, all Sites within the group form full-mesh connectivity. If it is of type SPOKE, it has a corresponding HUB Site Mesh Group, and each Site in the SPOKE group sets up a tunnel with every Site in the corresponding HUB group.

Figure: Hub and Spoke Combinations of Site Mesh Group

Details on all the parameters that can be configured for this object are covered in the API specification.
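The tunnel topology that HUB and SPOKE groups produce can be computed directly. In this sketch, the function name `site_mesh_tunnels` and the Site names are hypothetical, one HUB group is paired with one SPOKE group, and tunnels are modeled as unordered Site pairs regardless of whether they use IPsec, SSL, or clear transport.

```python
from collections.abc import Sequence
from itertools import combinations


def site_mesh_tunnels(hub: Sequence[str], spoke: Sequence[str] = ()) -> set[frozenset[str]]:
    """Return the set of Site-to-Site tunnels as unordered Site pairs."""
    # HUB group: every pair of Sites is connected (full mesh).
    tunnels = {frozenset(pair) for pair in combinations(hub, 2)}
    # SPOKE group: each spoke Site connects to every Site in its HUB group,
    # but spoke Sites do not connect to each other.
    for s in spoke:
        for h in hub:
            if s != h:
                tunnels.add(frozenset((s, h)))
    return tunnels


tunnels = site_mesh_tunnels(["dc-a", "dc-b", "dc-c"], ["store-1", "store-2"])
print(len(tunnels))
```

With three hub Sites and two spoke Sites this yields 9 tunnels: 3 for the hub full mesh and 3 for each spoke. The two spoke Sites have no direct tunnel between them.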

For IPsec connections between a Site and a Regional Edge, or between two Sites, both Public Key Infrastructure (PKI) and Pre-Shared Key (PSK) modes are supported. When using PKI, the system supports RSA 2048 certificates to authenticate remote peers. The following ciphers are used:

- Key Exchange: aes256-aes192-aes128, aes256gcm16

- Authentication: sha256-sha384

- DH groups: ecp256-ecp384-ecp521-modp3072-modp4096-modp6144-modp8192

- ESP: aes256gcm16-aes256/sha256-sha384

Site - Local Internet Breakout

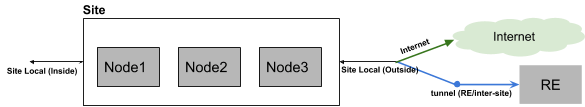

Even though the ideal egress point for Internet traffic is the Regional Edge (RE) Site, some traffic may need to be sent directly to the Internet. Note that this discussion applies only to the two-interface (default gateway) mode.

Figure: Site Local Network to Internet Connectivity

The virtual network on the inside (Site Local Inside) can be connected to the outside physical network (Site Local) using a network connector, which is discussed in a later section. On this network connector, you can configure SNAT, policy-based routing, a forward proxy for URL filtering, and a network firewall.

Details on all the parameters that can be configured for this object are covered in the API specification.
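As a generic illustration of what the SNAT option does on the network connector, the following sketch keeps a source NAT table with port translation, so many inside hosts share one outside address. It is not the product's implementation: the `SourceNat` class, the port range, and the addresses are hypothetical, the outside address is assumed to be the Site's HOST_IP on SITE_LOCAL_NETWORK, and a real NAT would also key on protocol and destination and handle port exhaustion and timeouts.

```python
import itertools


class SourceNat:
    """Minimal source NAT table: many inside (ip, port) flows share one outside IP."""

    def __init__(self, outside_ip: str, first_port: int = 20000) -> None:
        self.outside_ip = outside_ip
        self._next_port = itertools.count(first_port)  # no exhaustion handling
        self._out: dict[tuple[str, int], int] = {}     # inside flow -> outside port
        self._back: dict[int, tuple[str, int]] = {}    # outside port -> inside flow

    def translate(self, src_ip: str, src_port: int) -> tuple[str, int]:
        """Rewrite an outbound source address, reusing an existing mapping."""
        flow = (src_ip, src_port)
        if flow not in self._out:
            port = next(self._next_port)
            self._out[flow] = port
            self._back[port] = flow
        return self.outside_ip, self._out[flow]

    def reverse(self, outside_port: int) -> tuple[str, int]:
        """Map return traffic back to the original inside host and port."""
        return self._back[outside_port]
```

For example, on a fresh `SourceNat("192.0.2.10")`, `translate("10.1.0.5", 40000)` returns `("192.0.2.10", 20000)`, and calling `reverse(20000)` on the same object maps return traffic back to `("10.1.0.5", 40000)`.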

Virtual Site

A virtual Site is a tool for indirection. Instead of configuring each Site individually, it allows a given configuration to be applied to a set (or group) of Sites. A virtual Site is a configuration object that defines which Sites are members of the set.

The set of Sites in a virtual Site is defined by a label expression. For example, a virtual Site can contain all Sites that match “deployment in (production) and region in (sf-bay-area)”; this expression selects all production Sites in sf-bay-area.

Virtual Site objects are used in Site Mesh Groups, application deployment, advertise policies, and service discovery of endpoints. Because label expressions can select intersecting subsets, a given Site can belong to many virtual Sites.
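Virtual Site membership can be sketched by parsing the label expression and testing each Site's labels against it. The sketch assumes a simplified grammar, clauses of the form `key in (v1, v2)` joined by `and`, as in the example above; the function names, Site names, and virtual Site names are hypothetical. It also shows how one Site can fall into two intersecting virtual Sites.

```python
import re

# Hypothetical grammar for this sketch: clauses `key in (v1, v2)` joined by " and ".
CLAUSE = re.compile(r"^\s*([\w./-]+)\s+in\s+\(([^)]*)\)\s*$")


def parse_selector(expr: str) -> dict[str, set[str]]:
    """Turn a label expression into {label key: allowed values}."""
    clauses: dict[str, set[str]] = {}
    for part in expr.split(" and "):
        match = CLAUSE.match(part)
        if match is None:
            raise ValueError(f"unsupported clause: {part!r}")
        key, values = match.groups()
        clauses[key] = {v.strip() for v in values.split(",") if v.strip()}
    return clauses


def selects(selector: dict[str, set[str]], labels: dict[str, str]) -> bool:
    # Every clause must hold; a missing label never matches.
    return all(labels.get(key) in allowed for key, allowed in selector.items())


def memberships(sites: dict[str, dict[str, str]],
                virtual_sites: dict[str, str]) -> dict[str, list[str]]:
    """Map each Site to the sorted list of virtual Sites that select it."""
    parsed = {name: parse_selector(expr) for name, expr in virtual_sites.items()}
    return {site: sorted(v for v, sel in parsed.items() if selects(sel, labels))
            for site, labels in sites.items()}


sites = {
    "sj-store-1": {"deployment": "production", "region": "sf-bay-area"},
    "nyc-store-1": {"deployment": "production", "region": "nyc"},
    "sj-lab": {"deployment": "staging", "region": "sf-bay-area"},
}
virtual_sites = {
    "prod-bay-area": "deployment in (production) and region in (sf-bay-area)",
    "all-production": "deployment in (production)",
}
print(memberships(sites, virtual_sites))
```

Here `sj-store-1` belongs to both `all-production` and `prod-bay-area`, while `sj-lab` belongs to neither.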

Concept of “where”

Technically, an endpoint (for example, a DNS server, the k8s API, or any other name to be resolved) must ultimately resolve to a virtual network and an IP address. Since it is not always convenient, or even possible, to provide a virtual network and an IP address, many APIs let you define “where” instead. This concept of “where” makes it easier to define configuration and policy upfront.

Value of “where” can be any one of the following:

- Virtual Site: configuration is applied on all Sites selected by the virtual Site, on the Site local network of each Site. The name is resolved, or discovery is done, on all these Sites.

- Virtual Site, network type: configuration is applied on all Sites selected by the virtual Site, on the virtual network of the given type on each of those Sites.

- Site, network type: configuration is applied on this specific Site, on the virtual network of the given type.

- Virtual network: configuration is applied on all Sites where this network is present.
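The four forms of “where” can be modeled as a tagged union that expands to concrete (Site, network) targets. This is a sketch, not the API's schema: the class names, the `resolve` function, and the inventory dictionaries are hypothetical, it uses SITE_LOCAL_NETWORK as the name of the Site local network type, and it assumes that virtual Site membership has already been computed from the label expression.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class InVirtualSite:            # "Virtual Site" (Site local network implied)
    virtual_site: str


@dataclass(frozen=True)
class InVirtualSiteNetwork:     # "Virtual Site, network type"
    virtual_site: str
    network_type: str


@dataclass(frozen=True)
class InSiteNetwork:            # "Site, network type"
    site: str
    network_type: str


@dataclass(frozen=True)
class InVirtualNetwork:         # "Virtual network"
    network: str


Where = Union[InVirtualSite, InVirtualSiteNetwork, InSiteNetwork, InVirtualNetwork]
SITE_LOCAL = "SITE_LOCAL_NETWORK"


def resolve(where: Where,
            vsite_members: dict[str, list[str]],
            site_networks: dict[str, set[str]]) -> list[tuple[str, str]]:
    """Expand a "where" value into (Site, network type or network name) targets."""
    if isinstance(where, InVirtualSite):
        return [(s, SITE_LOCAL) for s in vsite_members[where.virtual_site]]
    if isinstance(where, InVirtualSiteNetwork):
        return [(s, where.network_type) for s in vsite_members[where.virtual_site]]
    if isinstance(where, InSiteNetwork):
        return [(where.site, where.network_type)]
    if isinstance(where, InVirtualNetwork):
        # Every Site on which this virtual network is present.
        return [(s, where.network) for s, nets in sorted(site_networks.items())
                if where.network in nets]
    raise TypeError(f"unknown where value: {where!r}")


members = {"prod-bay-area": ["sj-store-1", "sj-store-2"]}
networks = {"sj-store-1": {"SITE_LOCAL_NETWORK", "app-net"},
            "sj-store-2": {"SITE_LOCAL_NETWORK"}}
print(resolve(InVirtualSite("prod-bay-area"), members, networks))
print(resolve(InVirtualNetwork("app-net"), members, networks))
```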

Fleet

When you deploy a large number of Sites, it is common to perform the same configuration steps on each Site (manually or using automation), for example configuring physical constructs on the Site such as interfaces, virtual networks, and network firewalls. To remove the need to configure each Site individually, we provide the capability to manage a set of Sites as a group.

Since the virtual Site object allows an individual Site to belong to multiple virtual Sites, it is not ideal for configuring physical constructs such as interfaces: two virtual Sites could contain conflicting configurations for the same physical entity, making the result ambiguous.

To resolve this ambiguity for physical constructs, Sites can be managed with a “fleet” object. Fleet membership is exclusive: the same Site cannot be present in two fleets.

Configuring a Fleet

To configure a “fleet” object, define the label ves.io/fleet=<fleet label value> on the object. Every fleet in a tenant must have a unique <fleet label value>. When this label is attached to a Site, the Site becomes part of that fleet. The label can be attached at registration approval so that the physical devices get the proper configuration. The system also automatically creates a virtual Site representing this fleet, so that it can be used in other features that require a virtual Site.

A fleet is also tied to certified hardware, to map the physical devices that the hardware supports. It holds device-level configuration for each device, for example interface configuration for each network device, firewall configuration for these Sites, USB configuration, I/O devices, and storage devices. Once interfaces are defined as part of the fleet, they become members of virtual networks, which may in turn have connectors and other configuration. In this way, physical Site configuration is done through the fleet.

Since a lot of configuration is tied to a fleet, a design tradeoff was made: even an individual Site should be managed as a fleet. This means a fleet must always be configured when using features tied to fleets. It also makes it easier to add members in the future without changing the initial Site. A fleet can be assigned to a Site at registration time by attaching the fleet label to the Site.

Details on all the parameters that can be configured for this object are covered in the API specification.
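Fleet membership through the `ves.io/fleet` label can be sketched as a grouping step plus the per-tenant uniqueness check. The function names and Site names are hypothetical. Because each Site carries at most one value for a given label key, grouping by that value can never put a Site into two fleets, which is the exclusivity that a virtual Site cannot guarantee.

```python
FLEET_LABEL = "ves.io/fleet"


def fleet_members(site_labels: dict[str, dict[str, str]]) -> dict[str, list[str]]:
    """Group Sites by their ves.io/fleet label value.

    A label key holds one value per Site, so a Site can never land in two
    fleets: exclusivity falls out of the data model.
    """
    fleets: dict[str, list[str]] = {}
    for site, labels in sorted(site_labels.items()):
        value = labels.get(FLEET_LABEL)
        if value is not None:
            fleets.setdefault(value, []).append(site)
    return fleets


def check_unique_fleet_values(fleet_objects: dict[str, str]) -> None:
    """fleet_objects maps fleet object name -> its ves.io/fleet label value."""
    owner: dict[str, str] = {}
    for name, value in fleet_objects.items():
        if value in owner:
            raise ValueError(
                f"fleets {owner[value]!r} and {name!r} share value {value!r}")
        owner[value] = name


sites = {"s1": {FLEET_LABEL: "retail"}, "s2": {FLEET_LABEL: "retail"},
         "s3": {FLEET_LABEL: "factory"}, "s4": {}}
print(fleet_members(sites))
```

Site `s4` has no fleet label, so it is not part of any fleet.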
