Troubleshooting Guide for Secure Mesh Site v2 Deployments

Objective

This guide provides guidelines for troubleshooting Distributed Cloud Secure Mesh Site v2 issues, specifically those related to registration and provisioning.

Prerequisites

The following prerequisites apply:

- A Distributed Cloud Services Account. If you do not have an account, see Getting Started with Console.

- Review the CPU and memory resources required for the CE nodes in the Customer Edge Site Sizing Reference.

- Follow the general documentation for creating a Secure Mesh Site v2, as well as the documentation specific to your infrastructure provider.

- Ensure that the site is initially configured with a Site Local Outside (SLO) interface that can access the public Internet.

- Verify that the CE node(s) can access the F5 registration/proxy endpoint on the SLO interface. You can find the registration endpoint information by entering the generated site token into any JWT decoder, such as jwt.io.

- Verify that the CE node(s) can access the public IP addresses of F5 Regional Edges (REs) through the SLO interface, as listed in the Firewall and Proxy Server Allowlist Reference.

- Ensure that mandatory information (such as token, DNS, SLO IP, gateway, and more) is semantically accurate, as described in the how-to deployment guides for your provider.

- Tokens generated from the Distributed Cloud Console are valid for 24 hours. Check the token's validity using any JWT decoder, such as jwt.io, and create a new token if the old one has expired.

Overview

The new Secure Mesh Site v2 Customer Edge (CE) bring-up process is streamlined across all provider environments, with the objective of seamlessly bringing up the nodes for a given site and provider using all the information needed to bootstrap the nodes and register them with Distributed Cloud Console. However, incorrect user input or other external factors can prevent the site from being provisioned as expected. This guide covers common problems to help you identify the underlying cause and resolve site registration issues.

Troubleshooting tools for Site Registration/Provisioning

Site Dashboard Tools

This section introduces the tools that are used to troubleshoot the different scenarios. The Distributed Cloud Site dashboard has a per-site tools section that can be used to troubleshoot site nodes.

Figure: Site Troubleshooting Tools

These UI tools only work when the site is connected to F5 Distributed Cloud. Before the site is connected, it is highly recommended to connect directly to the node using SiteCLI, which can be accessed over SSH, VNC, or the console, depending on the environment.

Node SiteCLI Tools

SiteCLI is a command-line interface (CLI) tool available directly on the node. Log in to the node via SSH or the console. Once logged in, you can use several commands, such as health and diagnosis, to troubleshoot the node.

For public cloud providers, the default username is admin. Authentication can be done using an SSH key or a custom password, which you can configure from Distributed Cloud Console.

For non-cloud providers, the default username is admin and the password is Volterra123.

Observe Node VPM Status and Logs

VPM (Volterra Platform Manager) is an agent on the node that configures services (Kubernetes and F5 Distributed Cloud services) and the operating system. As the main component of the node software, it is responsible for node provisioning. Observing and analyzing the VPM logs is critical to identifying the root cause of a problem.

Check VPM Status

To check VPM status, use the following command from SiteCLI:

execcli systemctl-status-vpm

The following command provides software, OS, cluster, registry connectivity, and other useful troubleshooting information:

status vpm

Observe VPM Logs

The VPM logs can be accessed using two methods: SiteCLI and the site local UI. From SiteCLI, run the following command:

execcli journalctl -fu vpm

To use the site local UI, connect to the node with your browser at https://[node IP]:65500. Port 65500 must be open to reach the site local UI. Then navigate to Dashboard > Tools > Show Log > Volterra Platform Manager.
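Once you have a copy of the VPM log output (for example, text copied from the journalctl session above), matching it against the log signatures shown in the failure scenarios of this guide can point you to the relevant section quickly. The following is a minimal Python sketch, not an F5 tool: the patterns are copied from the example log lines in this guide, the scenario labels are this guide's section titles, and the function name classify is hypothetical. Real log wording may vary between software versions.

```python
import re
from typing import Iterable, List, Tuple

# (pattern, scenario) pairs. Patterns are copied from the example VPM log lines
# in this guide; the scenario names are this guide's section titles.
SIGNATURES: List[Tuple[str, str]] = [
    (r"Token is missing|cannot unmarshal /etc/vpm/user_data",
     "Node Provisioning User Input (cloud-init or VM Scripts)"),
    (r"Unknown token",
     "Invalid Token Presented By Node During Authentication/Registration"),
    (r"proxyconnect tcp: .*i/o timeout",
     "Node Network Connectivity Issues Toward Public F5 Site Registration Endpoint"
     " (also seen with duplicate SLO IP addresses)"),
    (r"Minimum (Memory|CPU) requirement \d+ is not met",
     "Not Enough CPU/Memory Resources Allocated to Node"),
    (r'Get "https://gcr\.io/v2/": Forbidden',
     "Connectivity to Public Image Repositories (gcr.io) Needed for Node Provisioning"),
    (r"UniqueSecondaryIndexViolationError|Duplicate Keys",
     "Site Nodes' Hostname Duplication Issue"),
]

COMPILED = [(re.compile(pattern), scenario) for pattern, scenario in SIGNATURES]


def classify(lines: Iterable[str]) -> List[Tuple[str, str]]:
    """Return (scenario, log line) pairs for lines matching a known signature."""
    hits: List[Tuple[str, str]] = []
    for line in lines:
        for pattern, scenario in COMPILED:
            if pattern.search(line):
                hits.append((scenario, line.strip()))
                break  # report only the first matching scenario per line
    return hits
```

Because classify accepts any iterable of strings, an open file of saved log text (for example, a hypothetical vpm.log) can be passed directly. Lines without a known signature are ignored, so an empty result only means none of these specific patterns appeared.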

Common Site Registration Failure Scenarios During Site Deployment

This section details registration failure scenarios, and the corresponding troubleshooting steps, that can occur during the registration and provisioning phases of site deployment. You can identify this class of failure when the site's status is Waiting for Registration. In this case, you can assume that the node was created but the Site Admin State has not changed.

Figure: Site Admin State

Node Provisioning User Input (cloud-init or VM Scripts)

Depending on the provider (all cloud providers and certain non-cloud providers), you must pass site node parameters during node provisioning, for example through cloud-init or scripts. Formatting errors or incorrect information, such as the token, SLO, DNS, or custom proxy settings, can prevent VPM from reading the information it needs to bootstrap the node.

Symptoms

The VPM logs indicate a failure to read the cloud-init bootstrap information. In this case, the /etc/vpm/user_data file was not created or has incorrect semantics.

Aug 13 06:40:30 node-0 vpm[2443]: register.go:299: Failed registration: Failed config validation: Token is missing, waiting for Token to be provided via REST API url:65001/ves.io.vpm/introspect/write/ves.io.vpm.config/update

Aug 21 11:02:11 node-1 vpm[5725]: Error: cannot read file for rSeries, token: , err: cannot unmarshal /etc/vpm/user_data, err: yaml: line 2: mapping values are not allowed in this context
Aug 21 11:02:11 node-1 vpm[5725]: Vpmd command error: cannot read file for rSeries, token: , err: cannot unmarshal /etc/vpm/user_data, err: yaml: line 2: mapping values are not allowed in this context
Aug 21 11:02:11 node-1 vpm[5725]: Usage:
Aug 21 11:02:11 node-1 vpm[5725]: vpmd [flags]
Aug 21 11:02:11 node-1 vpm[5725]: vpmd [command]
Aug 21 11:02:11 node-1 vpm[5725]: Available Commands:
Aug 21 11:02:11 node-1 vpm[5725]: completion Generate the autocompletion script for the specified shell
Aug 21 11:02:11 node-1 vpm[5725]: defaults Prints default daemon configuration
Aug 21 11:02:11 node-1 vpm[5725]: help Help about any command
Aug 21 11:02:11 node-1 vpm[5725]: unit Prints systemd unit file
Aug 21 11:02:11 node-1 vpm[5725]: version Display version
Aug 21 11:02:11 node-1 vpm[5725]: Flags:
Aug 21 11:02:11 node-1 vpm[5725]: --config string configuration file (default "/etc/vpm/config.yaml")
Aug 21 11:02:11 node-1 vpm[5725]: -h, --help help for vpmd
Aug 21 11:02:11 node-1 vpm[5725]: --initial-backup Create initial backup before daemon starts (default true)
Aug 21 11:02:11 node-1 vpm[5725]: --test-start Test daemon by starting and terminating immediately
Aug 21 11:02:11 node-1 vpm[5725]: Use "vpmd [command] --help" for more information about a command.
Aug 21 11:02:11 node-1 systemd[1]: vpm.service: Main process exited, code=exited, status=1/FAILURE
Aug 21 11:02:11 node-1 systemd[1]: vpm.service: Failed with result 'exit-code'.

Resolution

Ensure that the cloud-init format and semantics are correct, as described in the environment-specific provisioning user guides.

Invalid Token Presented By Node During Authentication/Registration

Symptoms

To register a site, you must download an authenticated, encoded token from Console. The token is valid for registration for 24 hours. Incorrect or expired tokens can result in the following errors in the VPM logs:

execcli journalctl -fu vpm
[...]
Aug 09 09:14:57 node-4a5a0 vpm[2442]: client.go:181: Sending registration request to https://register.staging.volterra.us/registerBootstrap
Aug 09 09:14:57 node-4a5a0 vpm[2442]: client.go:153: Client is using proxy &InternetProxy{HttpProxy:http://159.60.140.193:443,HttpsProxy:http://159.60.140.193:443,NoProxy:10.0.0.0/8,172.16.0.0/12,192.168.0.0/16,100.127.0.0/18,100.127.192.0/18,169.254.0.0/16,int.ves.io,int.volterra.us,ProxyCacertUrl:,}
Aug 09 09:14:57 node-4a5a0 vpm[2442]: register.go:767: Registration failed: Registration request: Request Register failed: Response with non-OK status code: 500, content: Unknown token: Token doesn't exist,, retry in 1m5.524906138s
Aug 09 09:14:57 node-4a5a0 vpm[2442]: checker.go:213: Starting check for new workload version, with timeout 40m0s
Aug 09 09:14:57 node-4a5a0 vpm[2442]: checker.go:112: running all cron tasks in another routine

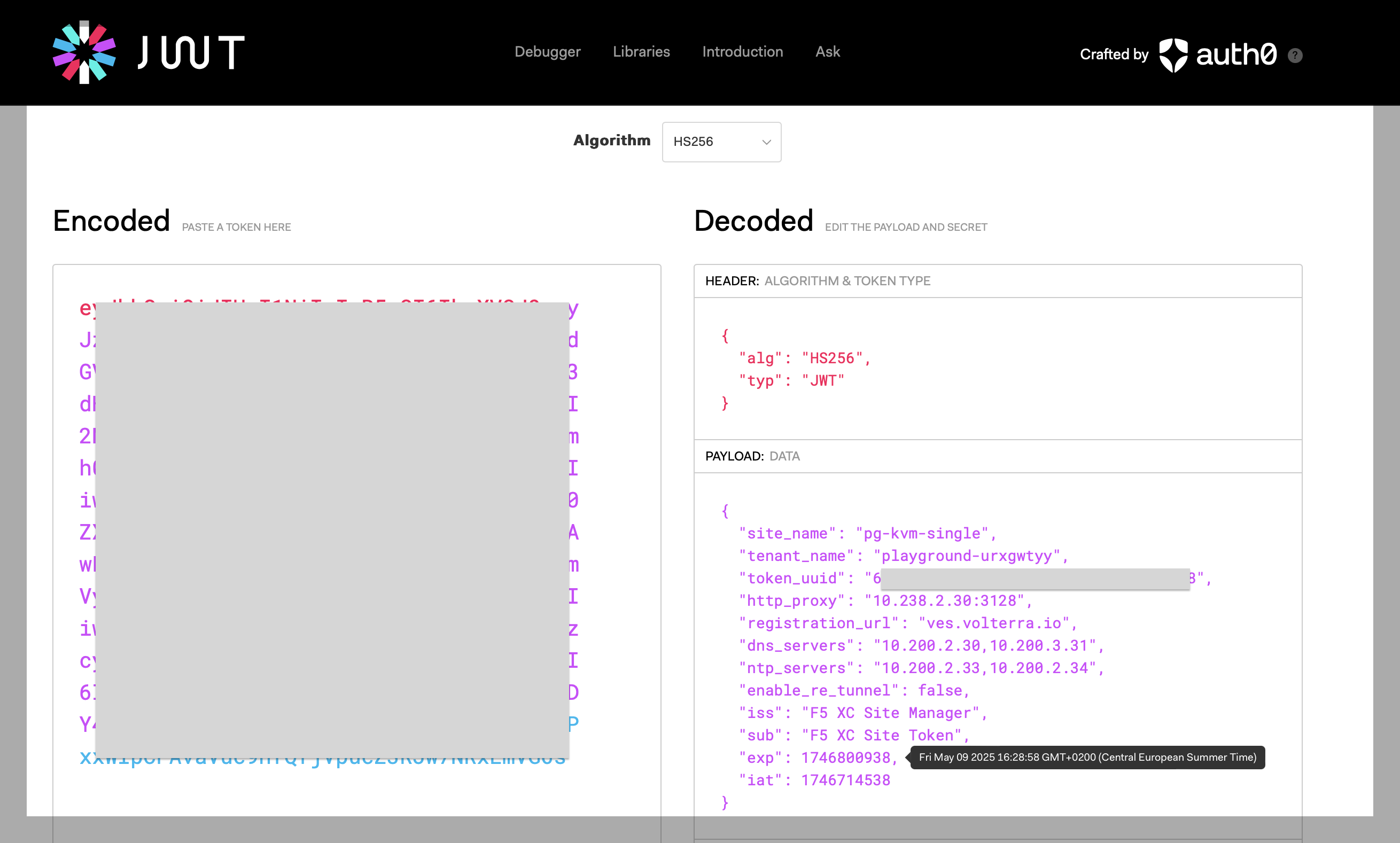

Verify the validity of the token (JWT) by pasting it into the Encoded area of any online JWT decoder tool, such as jwt.io, and hovering your mouse over the exp field in the payload area, as shown below. The payload also contains the site name, tenant name, and HTTP endpoints, which help confirm that the token is valid and that the public endpoints are correct for your site.

Figure: JWT Payload
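If you prefer not to paste a site token into a website, the payload can also be decoded locally: a JWT is three base64url-encoded parts separated by dots, and the exp claim is a Unix timestamp in seconds (RFC 7519). The following is a minimal Python sketch using only the standard library; the function names are hypothetical. It does not verify the token signature, so it is only suitable for reading the claims, not for proving the token is genuine.

```python
import base64
import json
import time
from typing import Optional


def decode_jwt_payload(token: str) -> dict:
    """Return the claims of a JWT. The signature is NOT verified."""
    parts = token.strip().split(".")
    if len(parts) != 3:
        raise ValueError("expected a JWT with three dot-separated parts")
    payload = parts[1]
    # JWTs use unpadded base64url; restore '=' padding before decoding.
    payload += "=" * (-len(payload) % 4)
    return json.loads(base64.urlsafe_b64decode(payload))


def seconds_until_expiry(claims: dict, now: Optional[float] = None) -> float:
    """Seconds left before the 'exp' claim (Unix time); negative once expired."""
    if "exp" not in claims:
        raise KeyError("token has no 'exp' claim")
    current = time.time() if now is None else now
    return float(claims["exp"]) - current
```

For example, seconds_until_expiry(decode_jwt_payload(token)) / 3600 gives the remaining validity in hours, and a negative value means a new token is needed. Printing decode_jwt_payload(token) also shows the site name, tenant, and endpoint claims; their key names are not documented in this guide, so inspect the output rather than relying on specific keys.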

Resolution

If the token passes validation, use it for node creation.

If the token fails validation, for example because it is expired or contains the wrong site name or proxy configuration, generate a new token.

Figure: Node Token

Node Network Connectivity Issues

Symptoms

If the site remains in the Waiting for Registration state for a prolonged period (more than 30 minutes), the issue could be network connectivity, such as a wrong SLO IP address, gateway, or DNS server. To verify connectivity, log in to the node's SiteCLI terminal and run the following commands:


WELCOME IN SITE CLI

This allows to:
- configure registration information
- factory reset of the Node
- collect debug information for support

Use TAB to select various options.

>>> execcli ping 8.8.8.8
cat: /etc/systemd/system/kubelet.service: No such file or directory
ping: connect: Network is unreachable

>>> execcli ip a
cat: /etc/systemd/system/kubelet.service: No such file or directory
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
2: ens3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    link/ether 52:54:00:ca:15:68 brd ff:ff:ff:ff:ff:ff
    altname enp0s3
3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:55:7a:c6:28 brd ff:ff:ff:ff:ff:ff
    inet 100.64.255.1/29 brd 100.64.255.7 scope global docker0
       valid_lft forever preferred_lft forever

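In the output above, the SLO interface (ens3) has no IPv4 address, which is why the ping fails with Network is unreachable. If IP connectivity works, check DNS resolution: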

>>> execcli curl-host google.com
<HTML><HEAD><meta http-equiv="content-type" content="text/html;charset=utf-8">
<TITLE>301 Moved</TITLE></HEAD><BODY>
<H1>301 Moved</H1>
The document has moved
<A HREF="http://www.google.com/">here</A>.
</BODY></HTML>

Resolution

If DHCP is the chosen method for SLO IP address allocation, check that a DHCP server exists on the network and can allocate an IP address for the node's SLO interface. To change the DNS server IP address, or to switch the IP address allocation method between static and DHCP, delete the node and provision a new node with the correct DNS or IP address settings using cloud-init, scripts, or the OVA template, depending on the provider.
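Before re-provisioning a node with a static SLO address, a quick offline sanity check of the planned address and gateway can catch common input mistakes, such as a gateway outside the SLO subnet. The following is a minimal Python sketch using the standard ipaddress module; the function check_static_slo and its checks are illustrative assumptions and are not part of SiteCLI or the provisioning scripts.

```python
import ipaddress
from typing import List


def check_static_slo(slo_cidr: str, gateway: str) -> List[str]:
    """Return problems found in a static SLO address/gateway pair; empty if none.

    Raises ValueError if either value is not a valid IP address.
    """
    problems: List[str] = []
    iface = ipaddress.ip_interface(slo_cidr)  # e.g. "192.168.122.219/24"
    gw = ipaddress.ip_address(gateway)
    net = iface.network
    if gw not in net:
        problems.append(f"gateway {gw} is outside the SLO subnet {net}")
    if gw == iface.ip:
        problems.append(f"gateway and SLO address are identical ({gw})")
    # /31 and /32 networks have no separate network/broadcast addresses (RFC 3021).
    if net.prefixlen < net.max_prefixlen - 1 and iface.ip in (
        net.network_address,
        net.broadcast_address,
    ):
        problems.append(f"{iface.ip} is the network or broadcast address of {net}")
    return problems
```

For example, check_static_slo("192.168.122.219/24", "192.168.1.1") reports that the gateway is outside 192.168.122.0/24. The check only covers address arithmetic; it cannot tell whether the gateway or DNS server is actually reachable.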

Symptoms

If other connectivity issues occur, run the diagnosis command.

Resolution

The diagnosis command provides:

- Critical checks for deployment and work domain reachability

- Registration status

- ARP table

- Configured DNS connectivity (ping test)

- DNS resolution (done by curl and dig)

- Gateway connectivity

- Interface information (ifconfig)

- NTP sync status

- Output from /etc/resolv.conf

- Host routing table (route -n)


>>> diagnosis

Node Network Connectivity Issues Toward Public F5 Site Registration Endpoint

With the new Secure Mesh Site v2, all node communication toward F5 Distributed Cloud for registration and provisioning is directed to a global, highly available public proxy hosted by F5 Distributed Cloud, which simplifies the node registration and provisioning process. The proxy IP address is an anycast address that is available globally throughout F5 PoPs. Customer policies or firewall rules may block communication between the CE and the proxy address.

Symptoms

To check proxy reachability, first find the HTTP proxy information by decoding the token used for provisioning, for example at jwt.io. Then, on the node's SiteCLI shell, run quick connectivity tests toward the HTTP proxy IP address and any other public endpoints to verify DNS and proxy reachability. Also observe the VPM logs, as shown below.

The IP address used is for demonstration purposes. The actual IP address for the proxy should be derived from the site token.


>>> execcli curl-host http://159.60.140.193:443
curl: (28) Failed to connect to 159.60.140.193 port 443: Connection timed out

>>> ping 8.8.8.8
--- 8.8.8.8 ping statistics ---
5 packets transmitted, 5 packets received, 0% packet loss
round-trip min/avg/max/stddev = 9.51202ms/9.559214ms/9.682389ms/62.34µs

Observe VPM logs with the following log signatures:

execcli journalctl -fu vpm
[...]
Aug 12 12:02:14 node-e6f4d vpm[2433]: client.go:181: Sending registration request to https://register.staging.volterra.us/registerBootstrap
Aug 12 12:02:14 node-e6f4d vpm[2433]: client.go:153: Client is using proxy &InternetProxy{HttpProxy:http://159.60.140.193:443,HttpsProxy:http://159.60.140.193:443,NoProxy:10.0.0.0/8,172.16.0.0/12,192.168.0.0/16,100.127.0.0/18,100.127.192.0/18,169.254.0.0/16,int.ves.io,int.volterra.us,ProxyCacertUrl:,}
Aug 12 12:02:44 node-e6f4d vpm[2433]: client.go:188: Unable to parse registration error: unexpected end of JSON input
Aug 12 12:02:44 node-e6f4d vpm[2433]: register.go:767: Registration failed: Registration request: Request Register failed: Request error: Post "https://register.staging.volterra.us/registerBootstrap": proxyconnect tcp: dial tcp 159.60.140.193:443: i/o timeout, retry in 1m3.934307169s

Resolution

Check your network firewall to ensure that communication to the F5 HTTP proxy endpoint is allowed on port 443. After adjusting your network and security settings, reboot the node to trigger registration.
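To confirm the firewall change before rebooting the node, you can test TCP reachability of the proxy on port 443 from a test host that sits on the same network segment and firewall path as the node's SLO interface (an assumption about your environment). The following is a minimal Python sketch; the function tcp_reachable is hypothetical, and it only checks that a TCP handshake completes, not that the proxy accepts registration traffic. Take the proxy address from your own site token, since the address in this guide is for demonstration only.

```python
import socket


def tcp_reachable(host: str, port: int = 443, timeout: float = 5.0) -> bool:
    """Return True if a TCP handshake with host:port completes within `timeout` seconds."""
    try:
        # create_connection resolves the name (if any) and tries each address in turn.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Timeouts, refused connections, unreachable networks and DNS failures
        # are all OSError subclasses.
        return False
```

For example, tcp_reachable("159.60.140.193", 443) returning False from such a host is consistent with the i/o timeout shown in the VPM logs above.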

Not Enough CPU/Memory Resources Allocated to Node

Symptoms

Check the node's VPM logs for messages about insufficient memory or CPU.

execcli journalctl -fu vpm
[...]
Aug 08 11:14:16 node-a4124 vpm[2962]: register.go:763: Registration failed: Registration request: Request Register failed: Response with non-OK status code: 500, content: {"code":13,"details":[],"message":"fail to auto approve site 9c439aaa-dc68-4491-8630-a19ee846efd4, err: rpc error: code = FailedPrecondition desc = Registration validation failed: Minimum Memory requirement 14000 is not met, registration has 13000"}, retry in 1m5.203464305s

execcli journalctl -fu vpm
[...]
Aug 07 14:19:07 node-469c2 vpm[2870]: register.go:763: Registration failed: Registration request: Request Register failed: Response with non-OK status code: 500, content: {"code":13,"details":[],"message":"fail to auto approve site 0ada3d48-bb50-45e3-a768-6cb41a4da931, err: rpc error: code = FailedPrecondition desc = Registration validation failed: Minimum CPU requirement 4 is not met, registration has 2"}, retry in 1m5.419818009s

Resolution

Re-provision the node with at least the minimum resources indicated in the Customer Edge Site Sizing Reference guide: 16 GB RAM and 4 CPU cores.
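When these validation messages appear in the VPM logs, extracting the required and reported values makes the shortfall explicit. The following is a minimal Python sketch that parses the message text shown above; the names REQUIREMENT_RE, Shortfall, and find_shortfalls are hypothetical. The log message does not state the memory units, so values are reported exactly as logged.

```python
import re
from typing import List, NamedTuple

# Matches the validation text in the VPM registration errors, for example
# "Minimum Memory requirement 14000 is not met, registration has 13000".
REQUIREMENT_RE = re.compile(
    r"Minimum (?P<resource>\w+) requirement (?P<required>\d+) is not met, "
    r"registration has (?P<actual>\d+)"
)


class Shortfall(NamedTuple):
    resource: str  # "Memory" or "CPU" in the example logs
    required: int  # value as logged; memory units are not stated in the message
    actual: int

    @property
    def missing(self) -> int:
        return self.required - self.actual


def find_shortfalls(log_text: str) -> List[Shortfall]:
    """Return every unmet minimum-resource requirement found in the log text."""
    return [
        Shortfall(m["resource"], int(m["required"]), int(m["actual"]))
        for m in REQUIREMENT_RE.finditer(log_text)
    ]
```

Run against the two example log lines above, this yields one Memory entry (14000 required, 13000 reported) and one CPU entry (4 required, 2 reported).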

Connectivity to Public Image Repositories (gcr.io) Needed for Node Provisioning

Symptom

Check the VPM logs for signatures indicating that the node cannot download images from public Docker repositories, for example because security requirements in your environment mandate a proxy.

execcli journalctl -fu vpm
[...]
Aug 09 13:19:53 node-75cc1 systemd[1]: Started VP Manager service.
Aug 09 13:19:53 node-75cc1 vpm[2753]: Unable to find image 'gcr.io/volterraio/vpm@sha256:71f9c31923967be28556d34e8abe6acb8b1d5e4e0600f3b6d2c87421f7bdcded' locally
Aug 09 13:19:53 node-75cc1 vpm[2753]: docker: Error response from daemon: Get "https://gcr.io/v2/": Forbidden.
Aug 09 13:19:53 node-75cc1 vpm[2753]: See 'docker run --help'.
Aug 09 13:19:53 node-75cc1 systemd[1]: vpm.service: Main process exited, code=exited, status=125/n/a
Aug 09 13:19:53 node-75cc1 systemd[1]: vpm.service: Failed with result 'exit-code'.

Resolution (Local Proxy)

Check whether the local network where the node is provisioned requires a proxy to connect to public endpoints. If your local environment mandates a proxy, re-provision the node with the scripts adjusted to include the proxy IP address, as shown below.

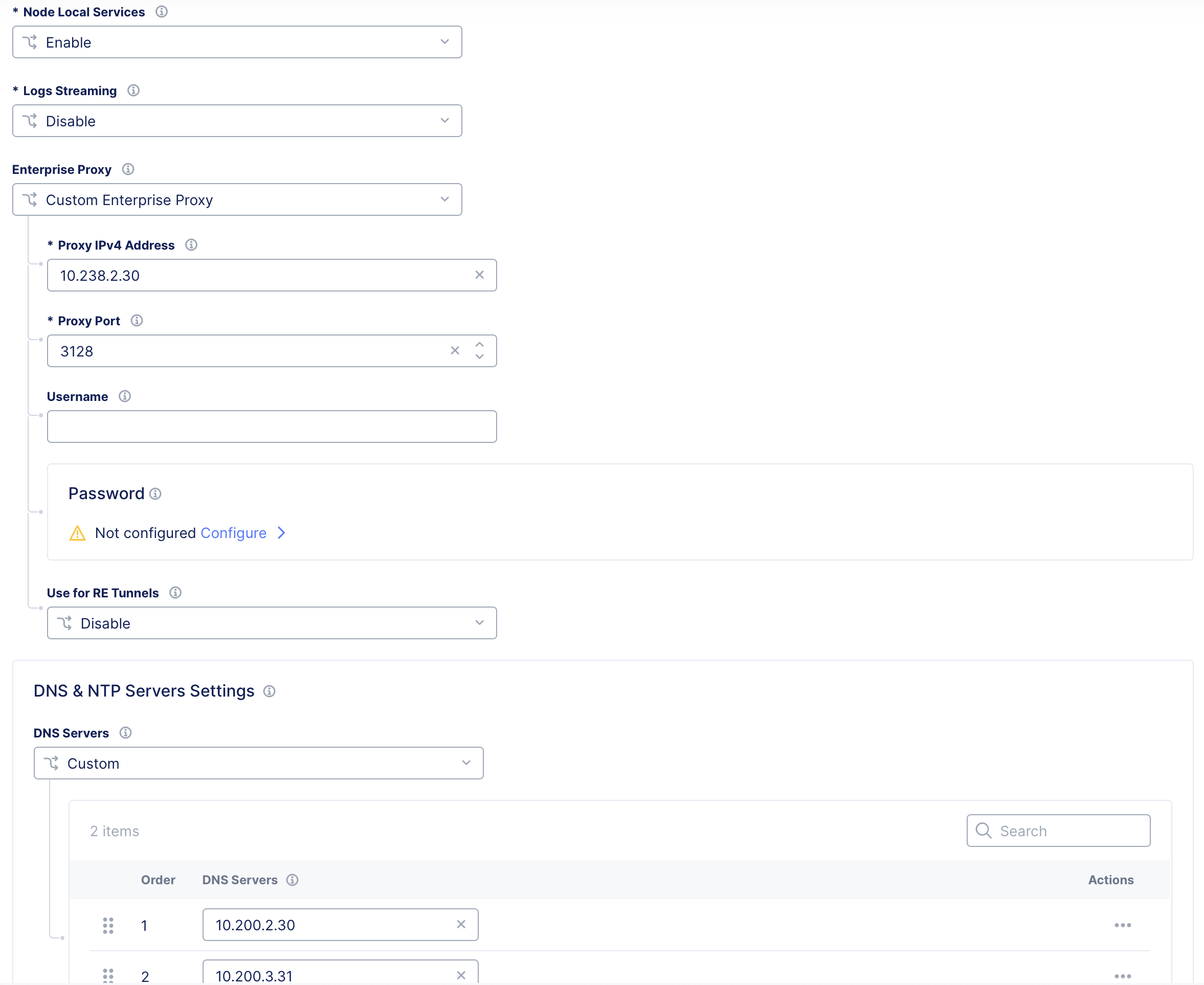

- In Console, navigate to your site and edit the configuration.

- Select the Site Management link from the left menu.

- From the Enterprise Proxy drop-down menu, select Custom Enterprise Proxy.

- Enter your proxy server IP address and port.

- Optionally, configure a username and password.

- Optionally, to route F5 RE tunnels through your internal proxy server, select Enable from the Use for RE Tunnels drop-down menu.

Important: When Use for RE Tunnels is enabled, the CE site always establishes connections to Regional Edges using SSL tunnel encapsulation, even if the Regional Edge tunnel type is set to IPsec and SSL. After the site comes online, the tunnel type setting in the Regional Edge section is automatically changed to SSL. IPsec is not supported for RE tunnels formed through a custom proxy because Internet Key Exchange (IKE), which is UDP-based, cannot be routed through a custom proxy. Therefore, the site setting is changed to disable IPsec and use SSL only.

Figure: Use for RE Tunnels

Figure: JWT Output Example

Resolution (L3/L7 Firewall)

Configure your L3/L7 firewall to allow domains needed for registration and provisioning. Find the details of the IP addresses and domains that need to be allowed at Firewall and Proxy Server Allowlist Reference.

Common Site Provision Failure Scenarios During Site Deployment

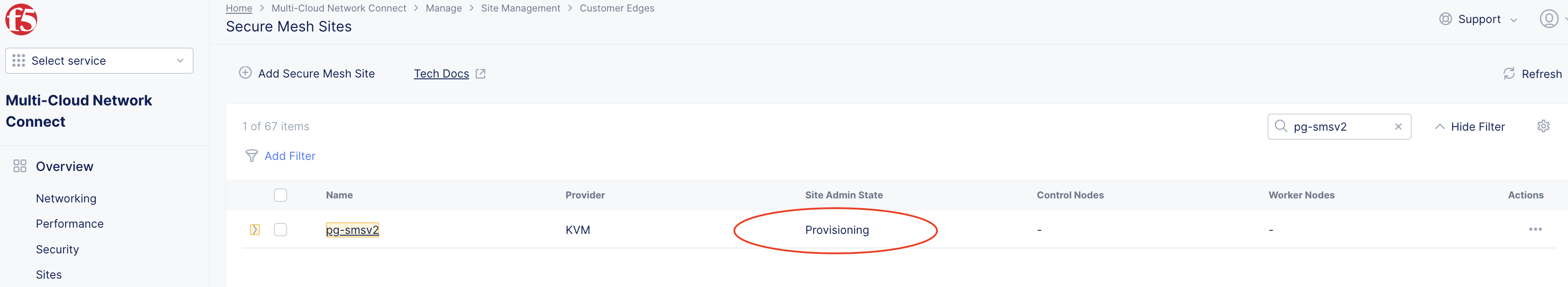

In some situations, the site progresses past registration but remains in the Provisioning state for a long time (multiple hours). In this case, you can assume that the node was created and registered successfully, but its deployment did not finish.

Figure: Site Status Provisioning

This is a list of potential scenarios that could result in a site being stuck in the Provisioning state:

- Site nodes with hostname duplication issues

- Site nodes with duplicate IP addresses

- Site node tunnels to RE are unhealthy

Site Nodes' Hostname Duplication Issue

All nodes in a given site must have unique hostnames. If a hostname is not provided during the node provisioning workflow, a name is generated automatically. This issue mainly occurs when the same name is provided for multiple nodes in a cluster.

Symptom

The node console login prompt shows the same hostname (for example, node-0) on two or more nodes.

Red Hat Enterprise Linux 9.2024.22.4 (Plow)
Kernel 5.14.0-427.22.1.el9_4.x86_64 on an x86_64
node-0 login:

The VPM logs show a Duplicate Keys error for the same node. In the log below, multiple keys were found for node-0, indicating that more than one node is named node-0.

execcli journalctl -fu vpm
[...]
Aug 13 06:09:42 node-0 vpm[2873]: register.go:763: Registration failed: Registration request: Request Register failed: Response with non-OK status code: 409, content: Create: Adding with usage transaction: STM Error: Applying transaction function: txn.AddEntry: AddEntry(ves.io.schema.registration.Object.default)
[…] UniqueSecondaryIndexViolationError(Etcd:/maurice/db/ves.io.schema.registration.Object.default) IndexName: TenantClusterNameHostnameIndex, Value playground-wtppvaog/pg-kvm-cluster-8/node-0, Key: 9d7d2ef1-978f-4030-a766-d9e4c80d0a89, Duplicate Keys: fe7d2bda-3e41-4ef6-a564-32fa2b927bb6,, retry in 1m4.781248518s

Resolution

Re-deploy the cluster with unique node names, or omit the node name from cloud-init, scripts, or the OVA template and let the system allocate a unique name for each node.
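If you supply node names yourself, checking the planned list for repeats before provisioning avoids this failure. The following is a minimal Python sketch; the function duplicate_hostnames is hypothetical. Comparing names case-insensitively is a conservative assumption, since this guide does not say whether the uniqueness check is case-sensitive. Empty entries are skipped because the system generates names for nodes without one.

```python
from collections import Counter
from typing import Dict, Iterable


def duplicate_hostnames(hostnames: Iterable[str]) -> Dict[str, int]:
    """Return planned hostnames that appear more than once, with their counts."""
    # Normalize whitespace and case; skip blank entries (auto-generated names).
    counts = Counter(h.strip().lower() for h in hostnames if h and h.strip())
    return {name: n for name, n in counts.items() if n > 1}
```

For example, a plan of node-0, node-1, node-0 returns {"node-0": 2}, which is exactly the situation shown in the log above.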

Site Nodes with Duplicate IP Addresses

Symptom

If nodes cannot finish provisioning, or SSH logins to them behave unpredictably, an IP address duplication issue among site nodes on the SLO network is highly likely. Various VPM log errors can indicate a duplicate IP address. For example, a node times out connecting to the proxy because multiple nodes use the same IP address toward the registration/provisioning endpoint. You can confirm this by checking the VPM logs, or by logging in to each node and cross-checking its IP address with SiteCLI shell commands, as shown below.

Aug 13 11:18:17 node-0 vpm[4916]: retry.go:188: Function failed. 50 retries left, err: Request ReportOperatingSystemStatus failed: Request error: Post "https://register-tls.staging.volterra.us/ves.io.maurice.site/reportosstatus": proxyconnect tcp: dial tcp 159.60.140.193:443: i/o timeout


>>> execcli ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
2: ens3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    link/ether 52:54:00:dd:2d:64 brd ff:ff:ff:ff:ff:ff
    altname enp0s3
    inet 192.168.122.219/24 brd 192.168.122.255 scope global dynamic noprefixroute ens3
       valid_lft 3552sec preferred_lft 3552sec
    inet6 fe80::5054:ff:fedd:2d64/64 scope link noprefixroute
       valid_lft forever preferred_lft forever

Resolution

Shut down one of the nodes with a duplicate IP address and re-provision a fresh node with a unique IP address for the SLO. Before using the new address, make sure it does not respond on the network, which confirms that it is not already allocated or assigned to another endpoint on the SLO network.
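To cross-check addresses across several nodes, you can collect the execcli ip a output from each node (through the console, since SSH may land on the wrong node when addresses collide) and compare the SLO addresses. The following is a minimal Python sketch; the function names are hypothetical, and the default interface name ens3 is taken from the example output above and is an assumption, so substitute the SLO interface name of your nodes.

```python
import re
from collections import defaultdict
from typing import Dict, List

# Matches IPv4 lines of `ip a` output, for example:
#   inet 192.168.122.219/24 brd 192.168.122.255 scope global dynamic noprefixroute ens3
# The interface name is the last field on the line.
INET_RE = re.compile(r"^\s*inet (\d{1,3}(?:\.\d{1,3}){3})/\d+\b.*\s(\S+)$")


def ipv4_by_interface(ip_a_output: str) -> Dict[str, List[str]]:
    """Map interface name -> IPv4 addresses found in one node's `ip a` output."""
    result: Dict[str, List[str]] = defaultdict(list)
    for line in ip_a_output.splitlines():
        match = INET_RE.match(line.rstrip())
        if match:
            address, ifname = match.groups()
            result[ifname].append(address)
    return dict(result)


def duplicate_slo_addresses(
    outputs: Dict[str, str], slo_ifname: str = "ens3"
) -> Dict[str, List[str]]:
    """Return SLO addresses claimed by more than one node, with the node names."""
    owners: Dict[str, List[str]] = defaultdict(list)
    for node, text in outputs.items():
        for address in ipv4_by_interface(text).get(slo_ifname, []):
            owners[address].append(node)
    return {address: nodes for address, nodes in owners.items() if len(nodes) > 1}
```

The input is a mapping from a label of your choice for each node to the text captured from that node, so the result names the nodes that need to be shut down and re-provisioned.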

Site Node Tunnels to RE are Unhealthy

Nodes establish tunnels toward F5 Distributed Cloud Regional Edges (REs) for control plane connectivity, as well as for the data plane connectivity needed to publish applications to the Internet using the F5 Distributed Cloud anycast network.

Symptom

If there is no connectivity to the Internet, the Console UI shows the tunnel status as Down.

Figure: Site Connectivity Down in Console UI

Alternatively, the tunnel's status and IP addresses can be checked using the SiteCLI shell command status ver.

>>> status ver
[…]
siteTunnelStatus:
siteTunnelStatus:
- ikeTunnelFlapReason: {}
isLocal: true
remoteAddress: 5.182.214.240
role: TUNNEL_INITIATOR
state: TUNNEL_DOWN
url: ny8-nyc.int.volterra.us
verNodeName: node-1e534
[…]
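On sites with several tunnels, scanning the status ver output for entries in the TUNNEL_DOWN state gives the list of RE addresses to test. The following is a minimal Python sketch that assumes the field layout shown above, with one list item per tunnel starting with a dash; the function down_tunnels is hypothetical. It is a line-based approximation, so pass it only the siteTunnelStatus portion of the output; a real YAML parser would be more robust if the output is valid YAML.

```python
import re
from typing import Dict, List

# Fields of interest in each tunnel entry of `status ver` output.
FIELD_RE = re.compile(
    r"^\s*(?:-\s+)?(remoteAddress|state|url|role|verNodeName):\s*(\S+)\s*$"
)
ITEM_START_RE = re.compile(r"^\s*-\s")  # a list item starts a new tunnel entry


def down_tunnels(status_ver_output: str) -> List[Dict[str, str]]:
    """Return the tunnel entries whose state is TUNNEL_DOWN."""
    tunnels: List[Dict[str, str]] = []
    current: Dict[str, str] = {}
    for line in status_ver_output.splitlines():
        if ITEM_START_RE.match(line) and current:
            tunnels.append(current)  # close the previous tunnel entry
            current = {}
        match = FIELD_RE.match(line)
        if match:
            current[match.group(1)] = match.group(2)
    if current:
        tunnels.append(current)
    return [t for t in tunnels if t.get("state") == "TUNNEL_DOWN"]
```

Each returned entry includes remoteAddress, which is the RE IP address to test from SiteCLI with execcli ping.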

Additionally, the nodes may be unable to reach the RE endpoints.


>>> execcli ping 5.182.214.240
PING 5.182.214.240 (5.182.214.240) 56(84) bytes of data.
--- 5.182.214.240 ping statistics ---
8 packets transmitted, 0 received, 100% packet loss, time 8196ms

>>> ping 8.8.8.8
--- 8.8.8.8 ping statistics ---
5 packets transmitted, 5 packets received, 0% packet loss
round-trip min/avg/max/stddev = 9.51202ms/9.559214ms/9.682389ms/62.34µs

Resolution

Check whether the local network blocks UDP port 4500, which prevents IPsec tunnels, or TCP port 443, which prevents SSL tunnels when the user selects SSL during site creation. By default, if the tunneling method is not explicitly configured, an automatic fallback mechanism changes the encryption from IPsec to SSL. The tunneling method can be chosen explicitly during the site creation workflow. Adjust your network firewall to open the appropriate ports and RE IP addresses.

Verify the CE node(s) can access the public IP addresses at Firewall and Proxy Server Allowlist Reference.

Concepts
